Introduction

In the previous lesson, we learned the advantages and disadvantages of Docker. We also configured the lab environment and looked at a hello-world Docker example.

In this lesson, we are going to dig deeper into Docker, Docker images, and the related commands. We will also work through a couple of tasks where you will tinker with Docker rather than just copy-paste commands.

Before moving on, let us first clarify one of the most commonly asked questions: “What’s the difference between Docker images and Docker containers?”

Docker Image vs Docker Container

A Docker image is a template (a blueprint) for creating a running Docker container. Docker uses the information available in the image to create (run) a container.

If you are familiar with programming, you can think of an image as a class and a container as an instance of that class.

An image is a static, read-only entity that sits on disk (it can be exported as a tarball), whereas a container is a dynamic, running entity. This is why you can run multiple containers from the same image.

In short, a container is the running form of an image.

Let’s see both images and containers in action using the Docker CLI.

If you are our paid customer and taking any of our courses like CDE/CDP/CCSE/CCNSE, please use the browser based lab portal to do all the exercises and do not use the below Virtual Machine.

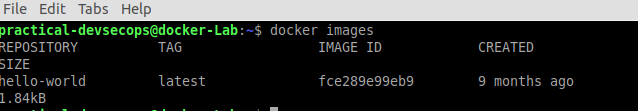

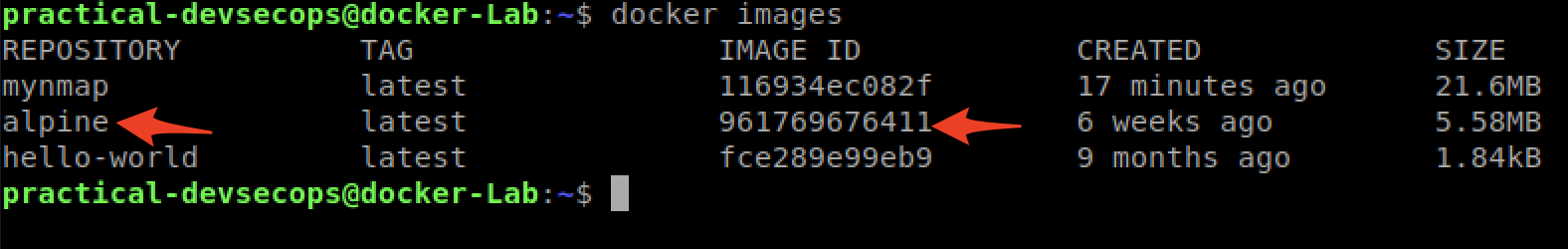

The command below lists the images present on the local machine.

$ docker images

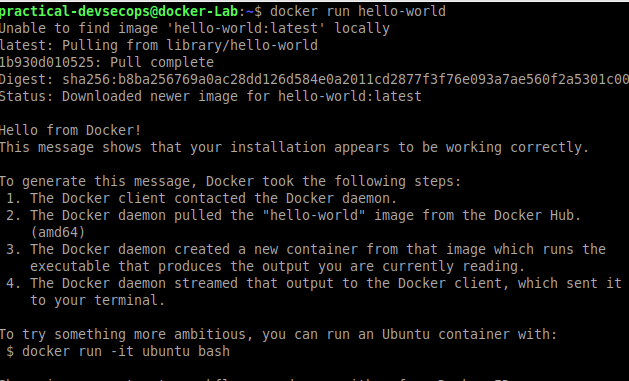

You can create a container from this image by using the docker run command.

$ docker run hello-world

In order to perform security assessments of the Docker ecosystem, you need to understand Docker images and containers in more detail.

Let’s explore these topics in depth.

Docker Image

We already know that a Docker image is a template used to create (run) a Docker container. But what is it made of? What does it take to create one? And what information is needed to turn an image into a container?

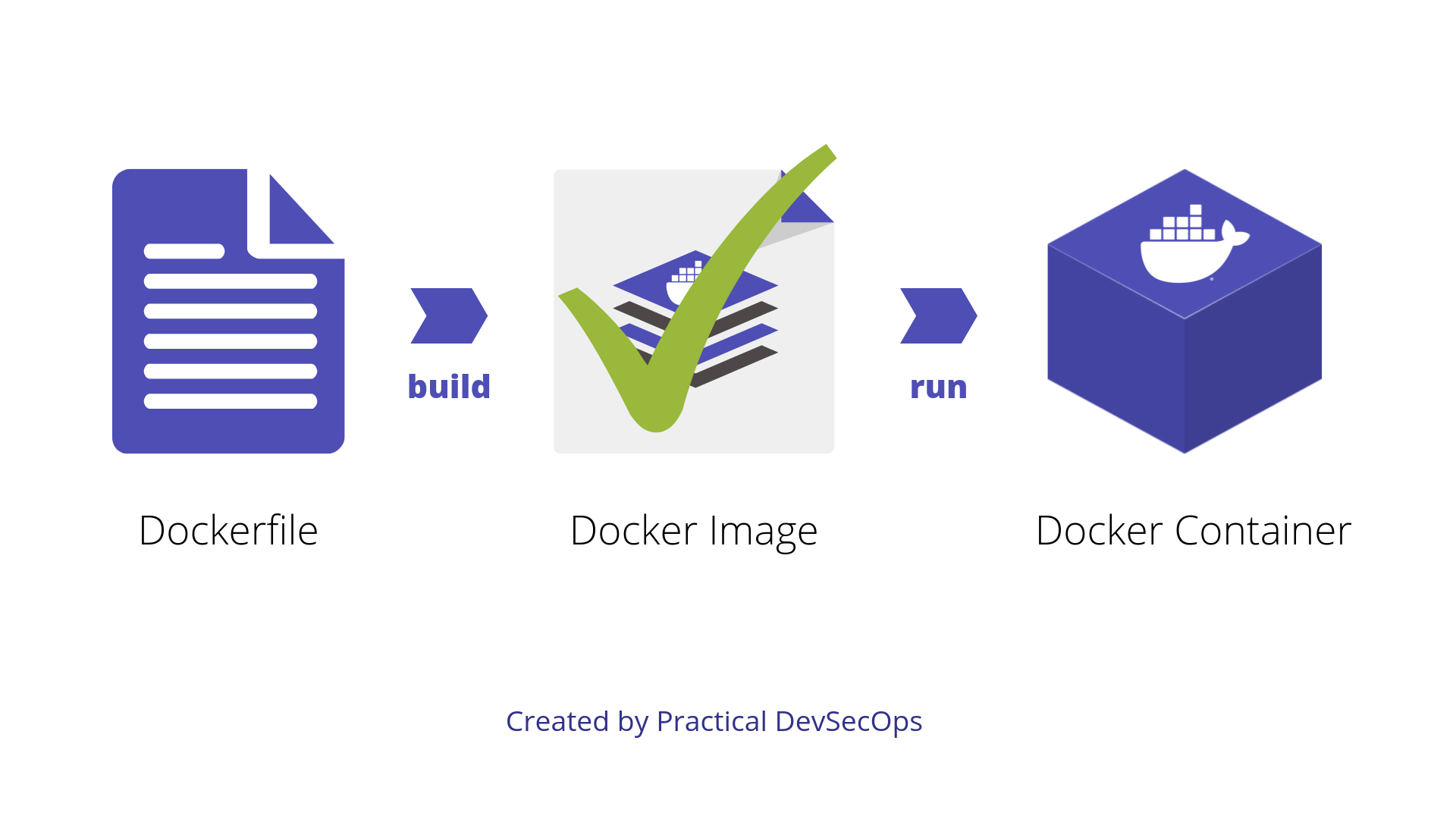

Dockerfile

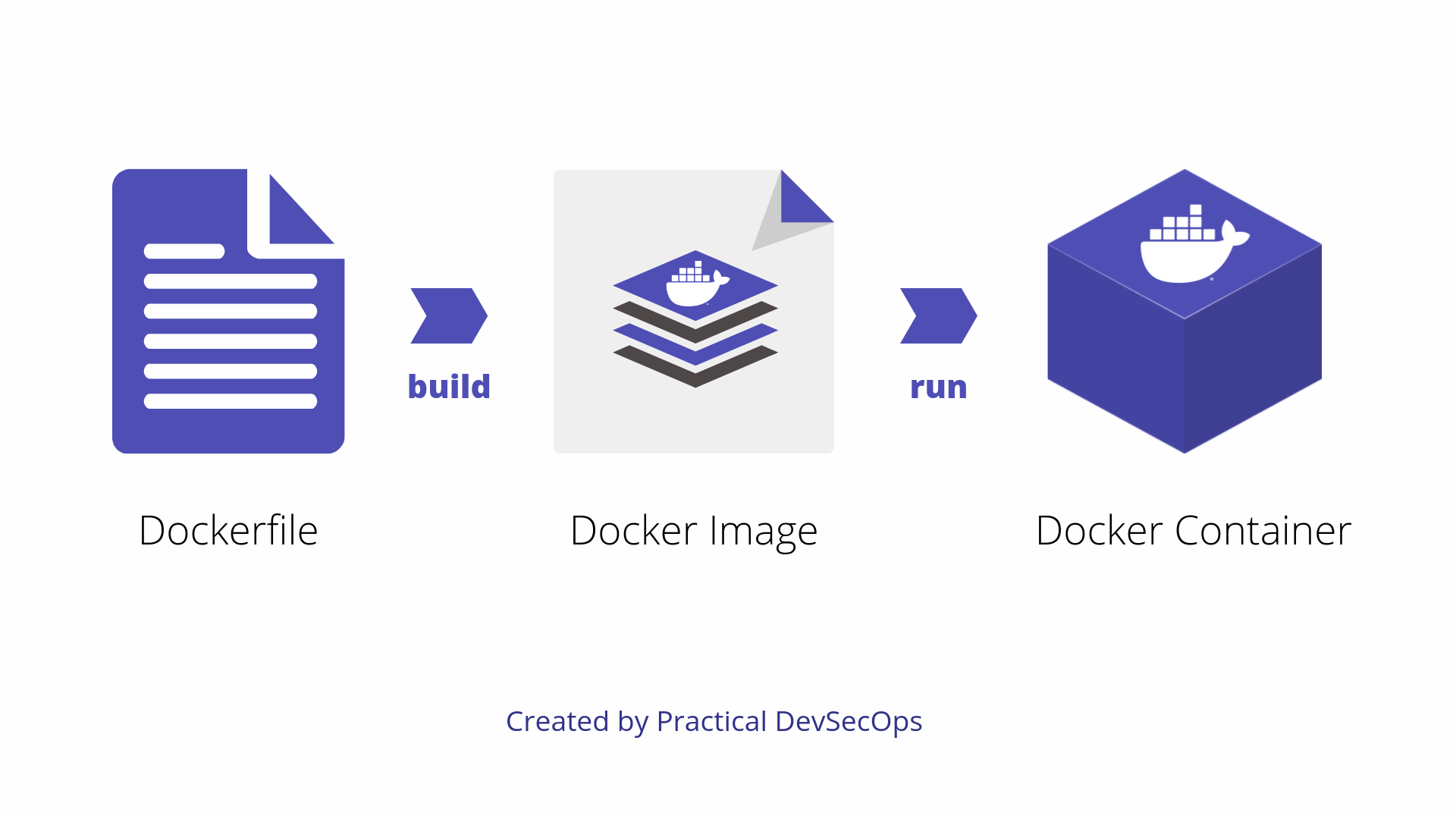

Docker images are usually created with the help of a Docker-specific file called a Dockerfile. A Dockerfile contains step-by-step instructions for creating an image.

As we can see, a Dockerfile is used to create a Docker image, and this image is then used to create a Docker container.

A simple example of a Dockerfile is shown below.

# Dockerfile for creating a nmap alpine docker Image
FROM alpine:latest
RUN apk update
RUN apk add nmap
ENTRYPOINT ["nmap"]
CMD ["localhost"]

Let’s explore what these Dockerfile instructions mean.

FROM: This instruction tells the daemon which base image to use while creating our new Docker image. In the above example, we are using a very minimal OS image called alpine (about 5 MB in size). You can also replace it with Ubuntu, Fedora, Debian, or any other OS image.

CMD: The CMD instruction sets the default command and/or parameters used when a Docker container runs. CMD can be overridden from the command line via the docker run command.

ENTRYPOINT: The ENTRYPOINT instruction is used when you would like your container to run the same executable every time. Usually, ENTRYPOINT is used to specify the binary and CMD to provide its default parameters.
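To make the split between ENTRYPOINT and CMD more concrete, here is a minimal shell sketch that models how the final command line is put together. It does not call Docker at all; effective_command is a hypothetical helper written only for this illustration, and it assumes both instructions use the exec (JSON array) form.

```shell
#!/bin/sh
# Simplified model of how the container's command line is assembled.
# Illustration only: this does not call Docker.
#   $1    = the ENTRYPOINT executable
#   $2    = the default CMD arguments, as one space-separated string
#   $3... = extra arguments typed after the image name in `docker run`
effective_command() {
    entrypoint=$1
    default_cmd=$2
    shift 2
    if [ "$#" -gt 0 ]; then
        # Arguments given on the command line replace CMD entirely.
        echo "$entrypoint $*"
    else
        # No arguments given, so the CMD defaults are appended.
        echo "$entrypoint $default_cmd"
    fi
}

effective_command nmap "localhost"                  # like: docker run mynmap
effective_command nmap "localhost" -p 80 127.0.0.1  # like: docker run mynmap -p 80 127.0.0.1
```

The first call prints nmap localhost and the second prints nmap -p 80 127.0.0.1: arguments given after the image name replace CMD, while the ENTRYPOINT binary stays the same.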

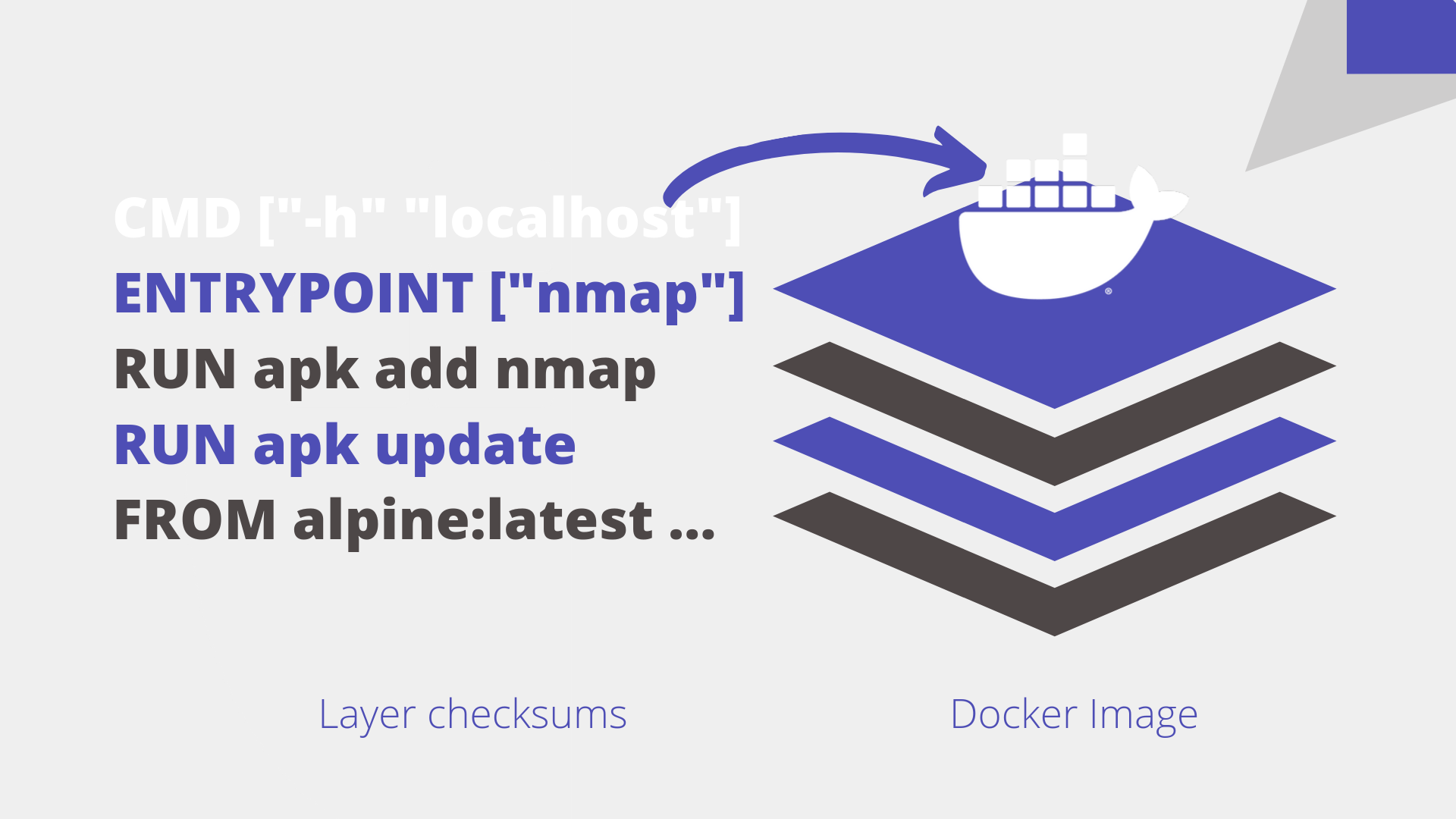

RUN: This instruction tells the Docker daemon to run the given commands as-is while creating the image. A Dockerfile can have multiple RUN instructions, and each of them creates a new layer in the image.

For example, consider the following Dockerfile.

# Dockerfile for creating a nmap alpine docker Image
FROM alpine:latest
RUN apk update
RUN apk add nmap
ENTRYPOINT ["nmap"]
CMD ["-h", "localhost"]

The FROM instruction will create one layer, the first RUN instruction another, the second RUN instruction one more, and finally ENTRYPOINT and CMD each add a layer of their own (these two only record metadata, so their layers are empty).

The example below shows the same Dockerfile on an Ubuntu base image.

# Dockerfile for creating a nmap ubuntu docker Image
FROM ubuntu:latest
RUN apt-get update
RUN apt-get install nmap -y
ENTRYPOINT ["nmap"]
CMD ["-h", "localhost"]

COPY: This instruction copies files from the host machine into the image.

ADD: The ADD instruction is similar to COPY but provides two more features.

- It supports URLs, so we can directly download files from the internet.

- It automatically extracts local tar archives into the image.

WORKDIR: This instruction sets the working directory for the instructions that follow it. You could think of it as the cd command in *nix-based operating systems.

EXPOSE: The EXPOSE instruction documents the port the container listens on. It does not publish the port by itself; you still need to map it with the -p option of docker run.
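As a rough illustration of COPY, WORKDIR, and EXPOSE working together, the sketch below writes a small Dockerfile into a scratch directory using a shell heredoc. The file name notes.txt, the /app directory, port 8080, and the copydemo image name are all assumptions made up for this example; they do not come from the lab.

```shell
#!/bin/sh
# Write a small, hypothetical Dockerfile into a scratch directory.
# The quoted 'EOF' stops the shell from expanding anything inside the heredoc.
demo_dir=$(mktemp -d)
echo "hello from the host" > "$demo_dir/notes.txt"

cat > "$demo_dir/Dockerfile" <<'EOF'
FROM alpine:latest
# Later instructions run relative to /app (created if it does not exist)
WORKDIR /app
# Copy notes.txt from the build context on the host into /app
COPY notes.txt .
# Document that the container is expected to listen on port 8080
EXPOSE 8080
CMD ["cat", "notes.txt"]
EOF

cat "$demo_dir/Dockerfile"
# To build and run it (requires Docker):
#   docker build -t copydemo "$demo_dir"
#   docker run --rm copydemo
```

The script itself only creates files, so it is safe to run anywhere; the two commented docker commands at the end are what you would run in the lab to try the image.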

Enough theory. Let’s build a simple Docker image that runs Nmap against localhost.

Docker Image creation using Dockerfile

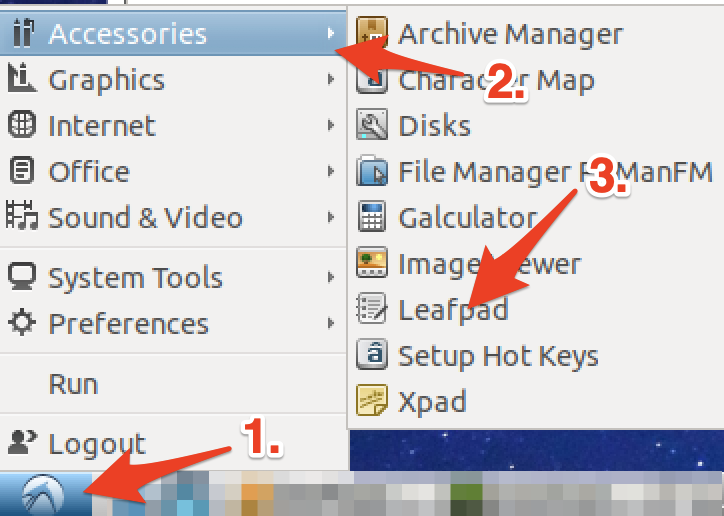

Start your VM, open the Leafpad text editor by going to Start Menu → Accessories → Leafpad, and paste the code below.

# Dockerfile for creating a nmap alpine docker Image
FROM alpine:latest
RUN apk update
RUN apk add nmap
ENTRYPOINT ["nmap"]
CMD ["-h", "localhost"]

Save the file (File → Save) with the name Dockerfile with no extension.
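When a Dockerfile is pasted from a web page, the straight double quotes in ENTRYPOINT and CMD are sometimes replaced by typographic (curly) quotes, and Docker then no longer reads the line as a JSON array. The following is a small sketch for spotting that problem before building; find_curly_quotes is a hypothetical helper name, and the sample file exists only for the demonstration.

```shell
#!/bin/sh
# Report lines in a file that contain typographic (curly) quotes.
# find_curly_quotes is a hypothetical helper, not part of Docker.
find_curly_quotes() {
    grep -n -e '“' -e '”' -e '‘' -e '’' "$1"
}

# Demonstration on a scratch file with one bad line.
sample=$(mktemp)
printf '%s\n' 'FROM alpine:latest' 'ENTRYPOINT [“nmap”]' 'CMD ["-h", "localhost"]' > "$sample"
find_curly_quotes "$sample"
```

In the lab you would run find_curly_quotes Dockerfile from the directory where you saved the file; no output means no curly quotes were found.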

Let’s use this Dockerfile to build the Nmap image.

Open the terminal and go to the directory where the Dockerfile exists then execute the following command.

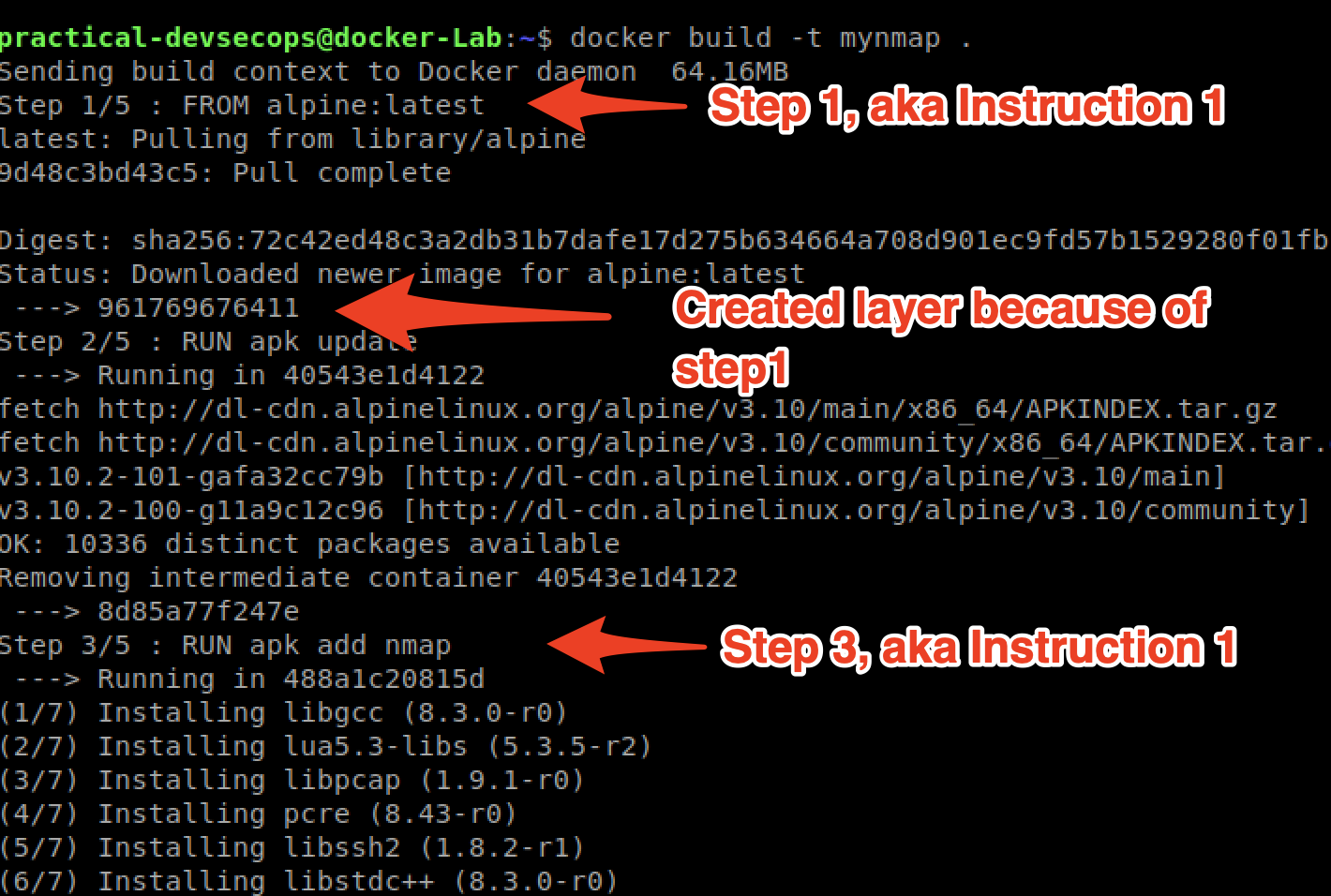

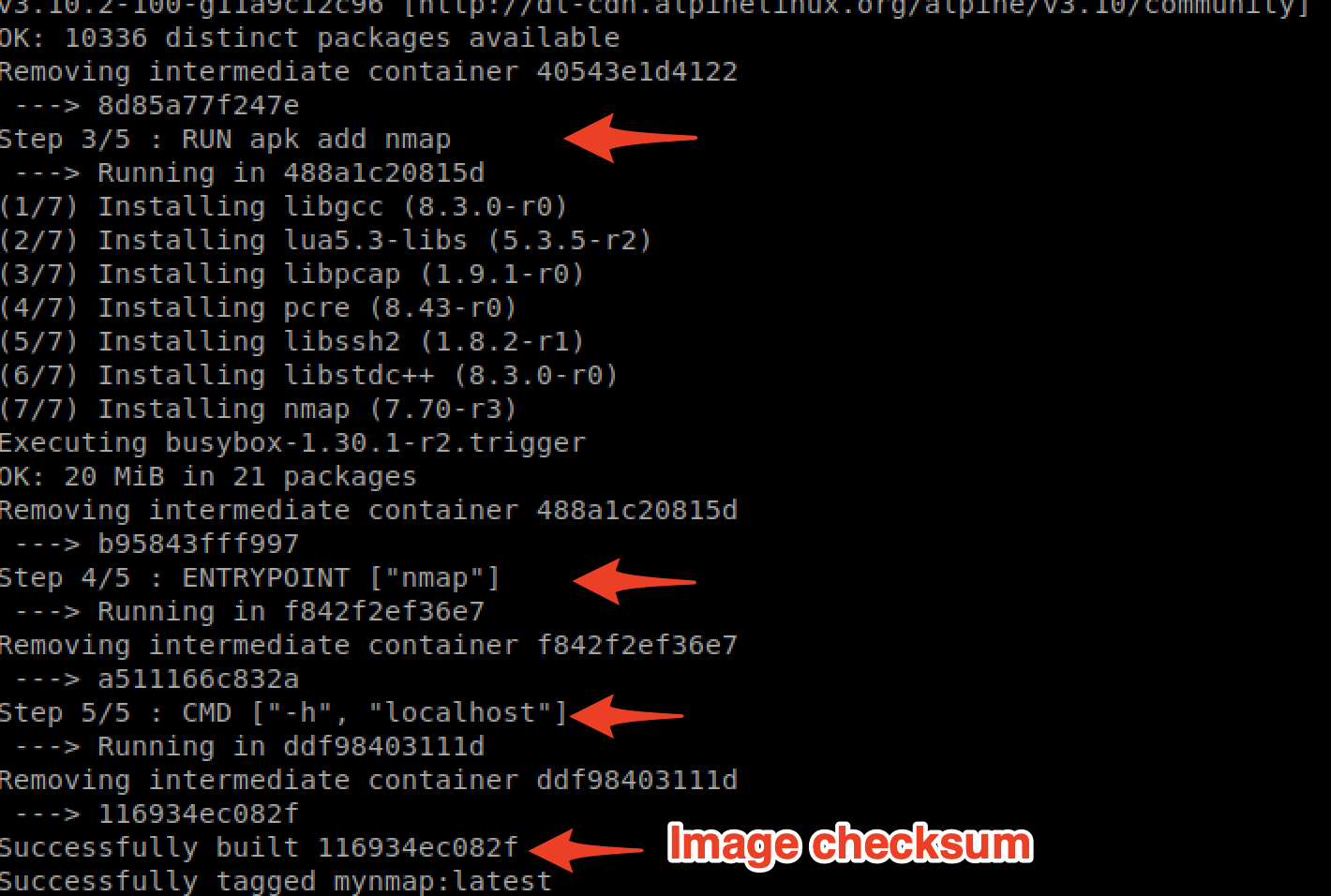

$ docker build -t mynmap .

Here, -t stands for tag, a human-readable name for our image.

Congratulations, you just created a brand new image from the Dockerfile 🙂

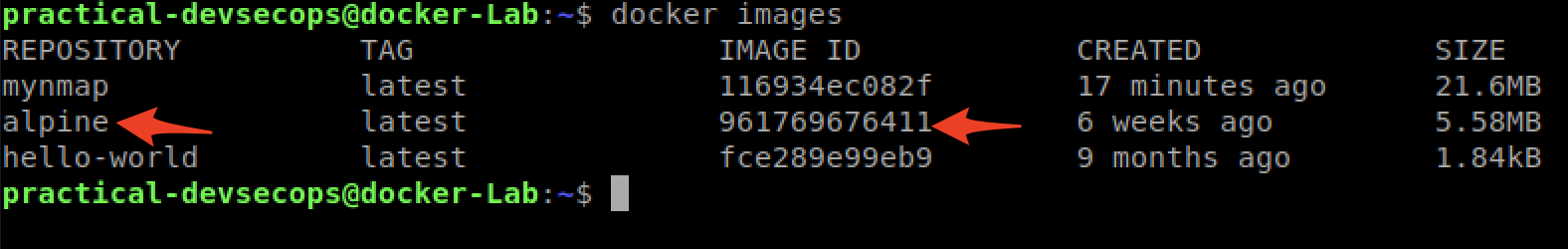

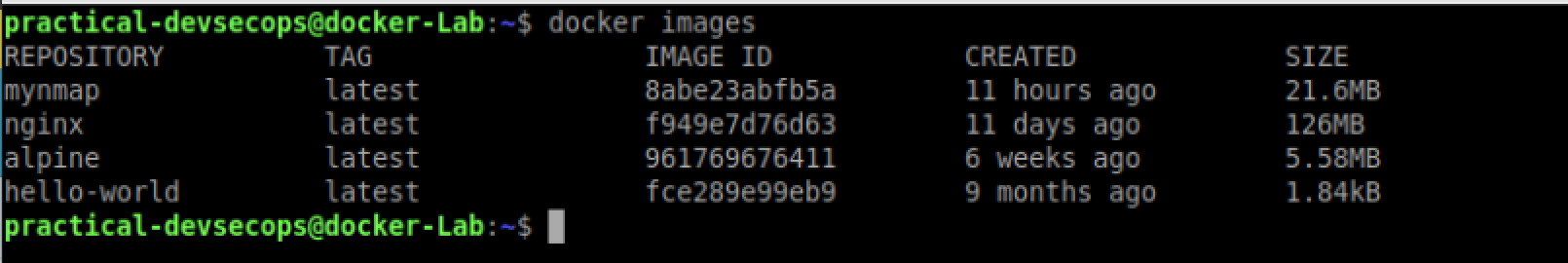

You can use the docker images command to verify that the mynmap image was created.

$ docker images

You can see that the mynmap image with image ID “116934ec082f” is present. Also, keep the alpine image’s ID in mind; we will come back to it shortly.

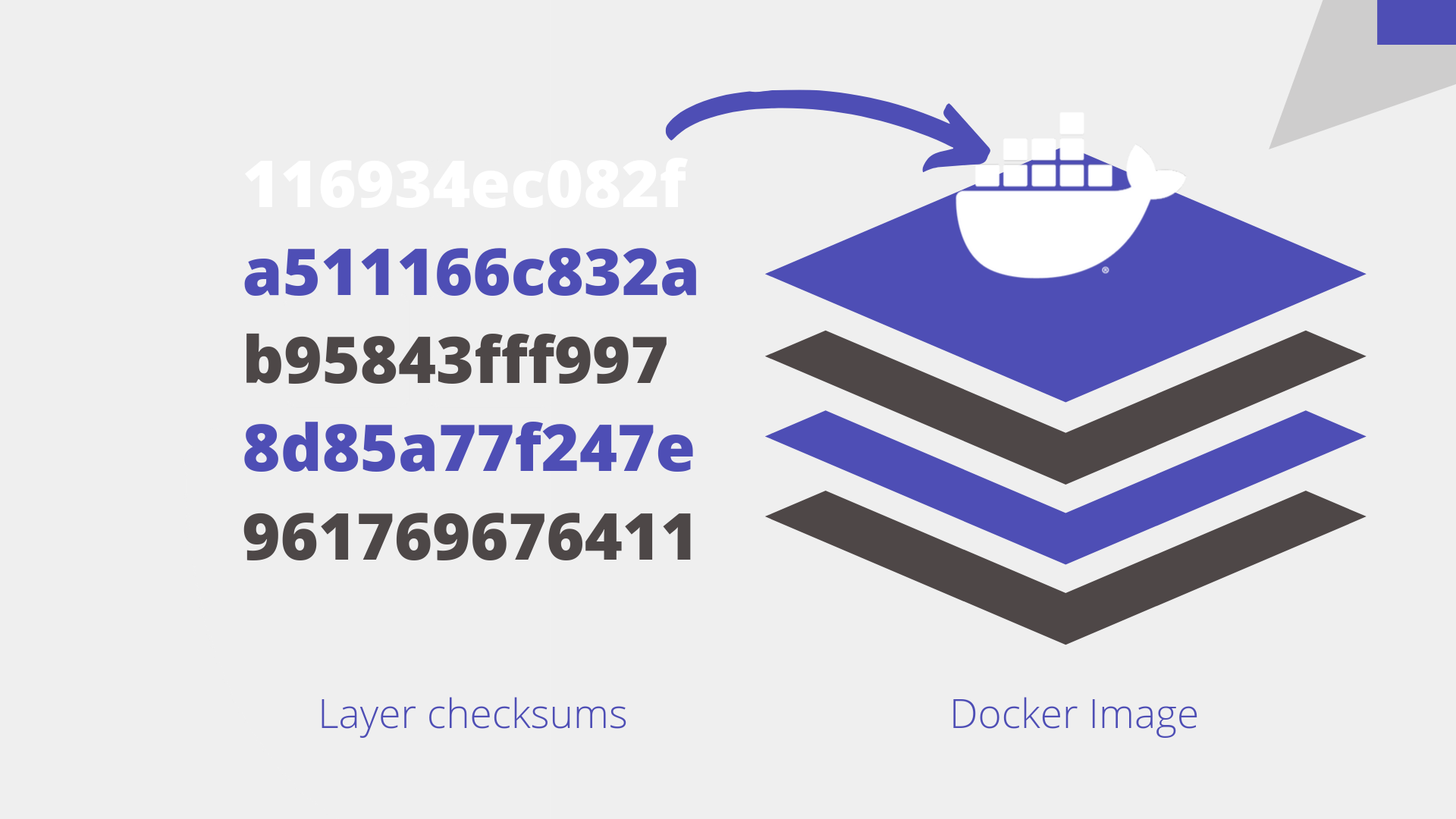

Docker Image Layers

When we execute the build command, the daemon reads the Dockerfile and creates a layer for every instruction.

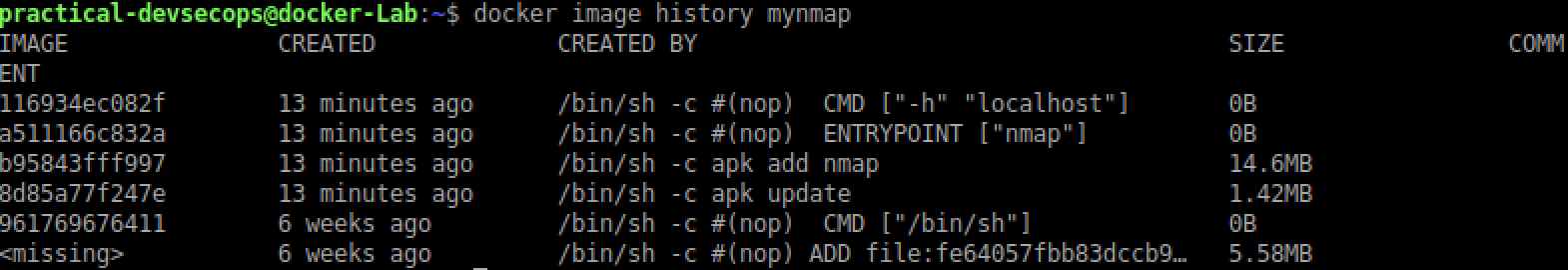

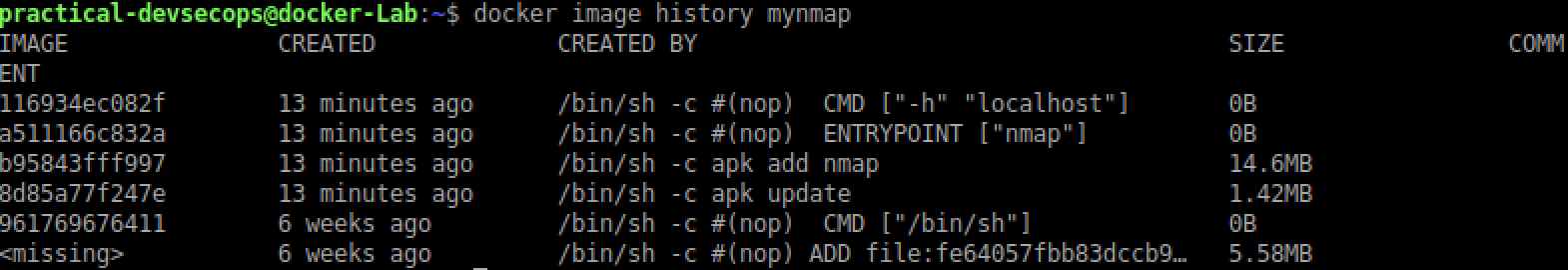

Let’s try to find the layers in the image we just created. We can use the docker image history command to see the image layers.

$ docker image history mynmap

In the above output, we have five lines (please ignore the last <missing> line for now). How many instructions do we have in our Dockerfile? Five.

If you look closely, each layer corresponds to an instruction in the Dockerfile.

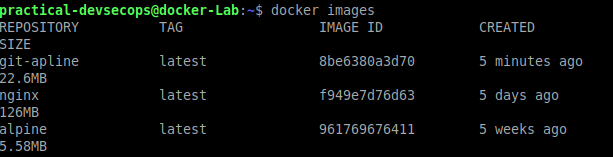

The <missing> line and the 961769676411 layer above it (#(nop) CMD ["/bin/sh"]) belong to alpine:latest. How do I know that? Let’s re-run the docker images command.

As you can see, the image ID of the alpine image is 961769676411, so the first layer is the alpine image.

The <missing> line means that the alpine image layers were built on a different system, so their intermediate image IDs are not available on our lab machine.

Also, these layers are read-only (hello, immutability). When you run a container, the Docker daemon creates a read-write layer (aka the container layer) on top of these read-only layers.

The docker image history command doesn’t always show uniform information, e.g., when you pull the image from Docker Hub (more on Docker Hub later) instead of creating it locally.

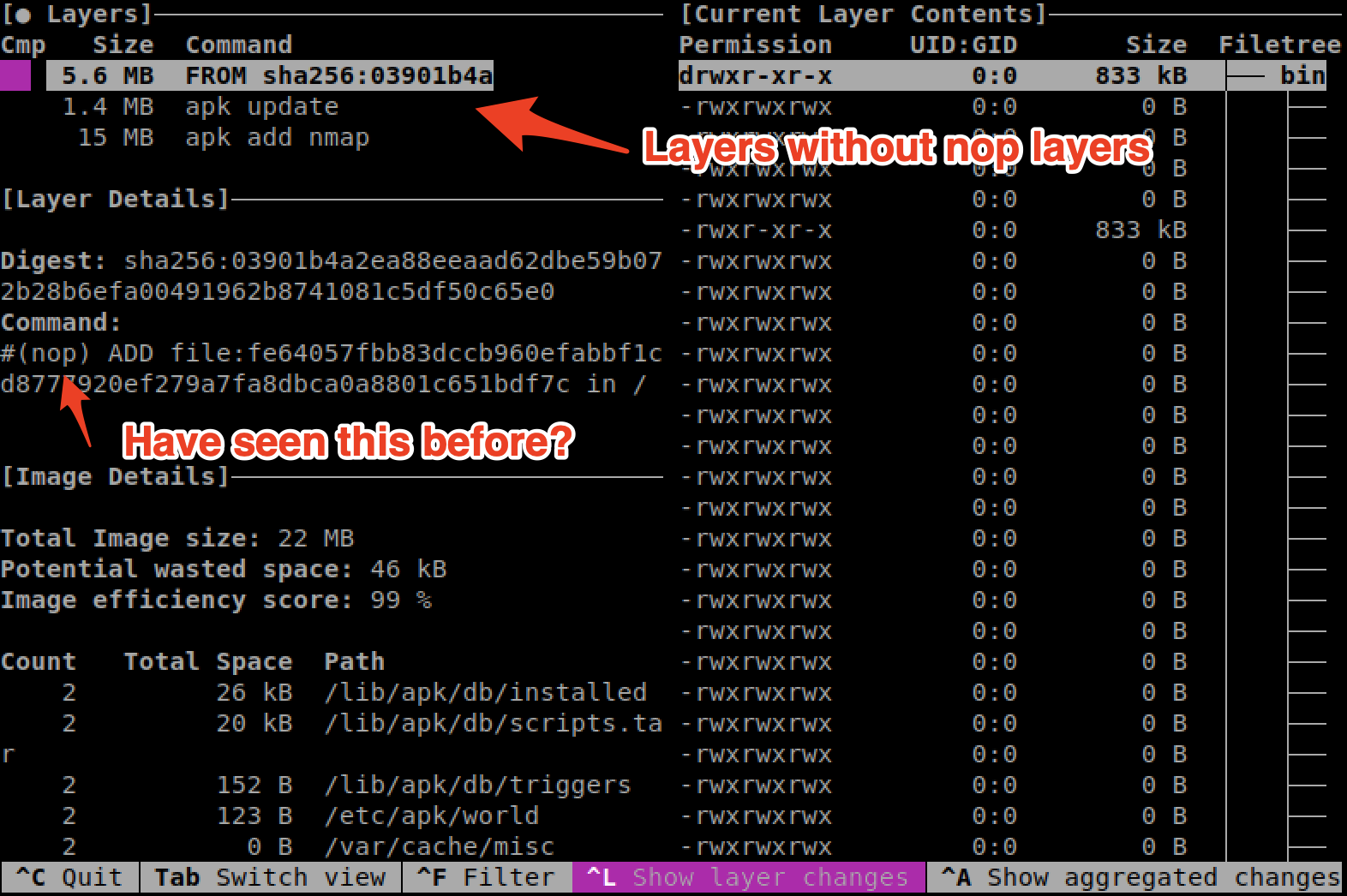

For this reason, we are going to use a tool called Dive, which handles these scenarios and shows a uniform output.

Let’s go ahead and install this tool by opening up a terminal and executing the following commands.

$ wget https://github.com/wagoodman/dive/releases/download/v0.8.1/dive_0.8.1_linux_amd64.deb

The above command downloads the Dive tool. Install the downloaded dive package using sudo privileges (use the password docker when prompted).

$ sudo apt install ./dive_0.8.1_linux_amd64.deb -y

Let’s try to use the tool on the mynmap image, using the following syntax.

$ dive mynmap

What? Why do we have 3 layers now instead of 5? The other layers are still there; the Dive tool just doesn’t show them because they are no-operation (nop) layers. If you revisit the docker image history output, you will see #(nop) in some of the instructions.

Why does Docker use layers?

Now, you might ask, why build an image with multiple layers when we can just build it in a single layer?

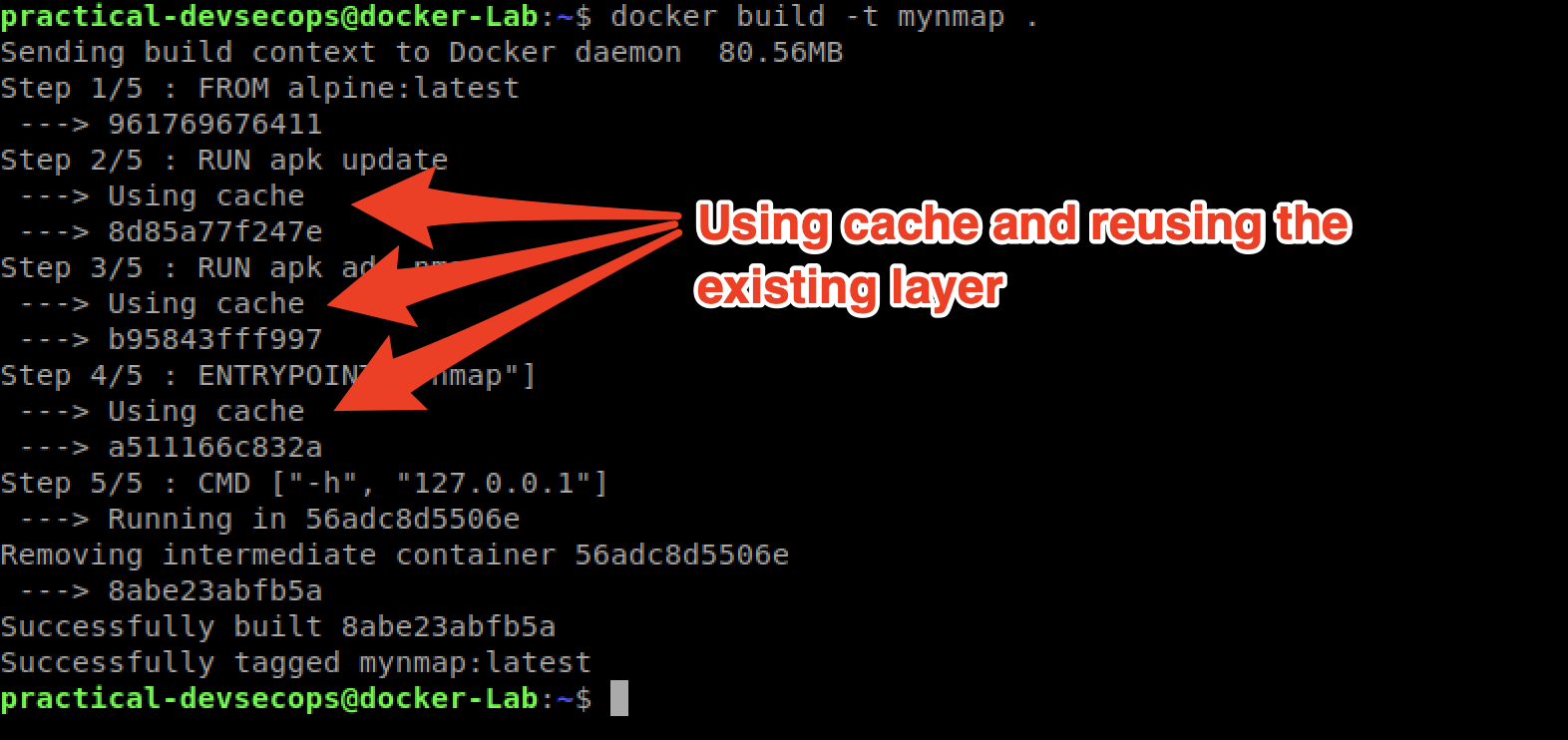

Let’s take an example to explain this concept better. Change the Dockerfile we created so that, instead of scanning localhost, it scans 127.0.0.1.

Once you have made this change, the Dockerfile should look as follows.

# Dockerfile for creating a nmap alpine docker Image
FROM alpine:latest
RUN apk update
RUN apk add nmap
ENTRYPOINT ["nmap"]
CMD ["-h", "127.0.0.1"]

Now, rebuild the image using docker build.

As you can see, the image was built using the existing layers from our previous build. If some of these layers are used by other images, those images can reuse the existing layers instead of recreating them from scratch.

This provides a few benefits for us.

- Efficient disk/image storage without creating duplicates.

- Faster image creation as we don’t have to rebuild them on every build.

- Reduces image download size if the layers already exist on the local machine.

That’s why Docker uses multiple layers instead of a single layer.
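The reuse described above can be pictured with a toy model in shell. In this sketch, each layer gets a key computed from its own instruction plus the key of the layer beneath it, so changing only the last instruction leaves every earlier key untouched. This is a deliberately simplified assumption for illustration, not Docker’s actual caching algorithm, and layer_keys is a hypothetical helper.

```shell
#!/bin/sh
# Toy model of build caching: a layer's key depends on its own instruction
# plus the key of the layer below it. Not Docker's real algorithm.
layer_keys() {
    parent=""
    while IFS= read -r instruction; do
        # cksum is used here only as a convenient POSIX checksum.
        parent=$(printf '%s|%s' "$parent" "$instruction" | cksum | cut -d' ' -f1)
        echo "$parent  $instruction"
    done
}

old_build=$(printf '%s\n' 'FROM alpine:latest' 'RUN apk update' 'RUN apk add nmap' \
    'ENTRYPOINT ["nmap"]' 'CMD ["-h", "localhost"]' | layer_keys)
new_build=$(printf '%s\n' 'FROM alpine:latest' 'RUN apk update' 'RUN apk add nmap' \
    'ENTRYPOINT ["nmap"]' 'CMD ["-h", "127.0.0.1"]' | layer_keys)

echo "$old_build"
echo "---"
echo "$new_build"
```

Comparing the two listings, the first four keys match and only the key of the CMD line differs, which mirrors why the rebuild could reuse the earlier layers.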

Task

Use the Whaler tool on the mynmap image and explore the various features it provides. Write down how this tool differs from the Dive tool.

Installation instructions for Whaler:

Step 1: Download the binary.

$ wget https://github.com/P3GLEG/Whaler/releases/download/1.0/Whaler_linux_amd64

Step 2: Give executable permissions to the downloaded whaler binary.

$ chmod +x Whaler_linux_amd64

Step 3: Run whaler on the docker image.

Usage

$ ./Whaler_linux_amd64 <image-name>

Note: You can also create Docker images in another way; please see Appendix A for more details.

So far, we have seen the Dockerfile and how it can be used to create Docker images. But how do we share these images with others in the organization or with the rest of the world?

This is where the concept of the docker registry comes into the picture.

Docker Registry and Docker Hub

Docker Registry

A Docker Registry is a storage and distribution system for named docker images.

In simple terms, a registry is a software service (think of an app store) for storing and sharing Docker images.

A registry organizes Docker images into repositories. Each repository can hold multiple versions (tags) of an image, e.g., nginx:1.14, nginx:1.15, etc. If no tag is given, latest is used by default.

Docker registries can be private or public, and self-hosted or cloud-based.

Both options have pros and cons; let’s explore them briefly.
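The “no tag means latest” rule can be sketched in a few lines of shell. The add_default_tag function below is a hypothetical helper written for this lesson, not a Docker command, and it deliberately ignores digest references (those containing @).

```shell
#!/bin/sh
# Sketch of the "no tag means latest" rule. add_default_tag is a
# hypothetical helper written for this lesson, not a Docker command.
add_default_tag() {
    ref=$1
    # Only the part after the last "/" can carry a tag; a ":" earlier in
    # the reference (e.g. localhost:5000) is a registry port.
    last_part=${ref##*/}
    case $last_part in
        *:*) echo "$ref" ;;          # tag already present
        *)   echo "$ref:latest" ;;   # no tag, so latest is assumed
    esac
}

add_default_tag nginx
add_default_tag nginx:1.15
add_default_tag localhost:5000/mynmap
```

This prints nginx:latest, nginx:1.15, and localhost:5000/mynmap:latest. The last case shows why the check looks only at the final path component: the colon in localhost:5000 belongs to the registry address, not to a tag.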

| Self-hosted (aka internal) | Cloud-based (aka public) |
| --- | --- |
| Single-tenant, more secure as they are internal to an organization. | Multi-tenant, might not be as secure as internal registries. |
| Costly to maintain and use. | Affordable because of the economy of scale. |
| Ideal for highly regulated industries (military, finance, etc.). | Ideal for general use cases. |
| More control over the registry and its underlying infrastructure. | Less control over the registry and underlying infrastructure. |
| Provides enterprise features like LDAP authentication, image scanning, and signing. | Free cloud-based registries do not provide enterprise features; however, their paid alternatives do. |

Let’s explore cloud-based registries first and then self-hosted registries.

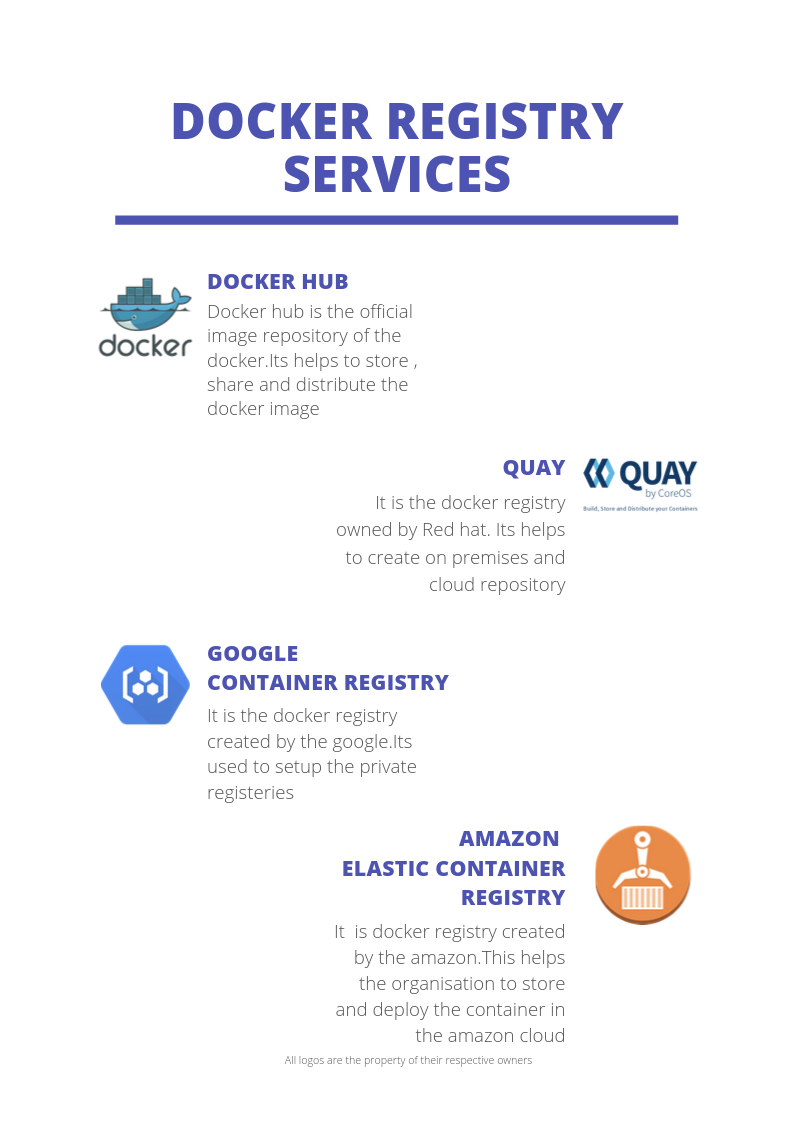

Docker Hub

Docker Hub is the world’s largest registry of publicly available container images. It is the default registry for Docker.

Apart from Docker hub, there are many well-established cloud registries out there, some of them are:

- Quay

- Google Container Registry

- Amazon Elastic Container Registry

As we mentioned before, Docker Hub is the default registry for Docker. Whenever the Docker daemon needs an image that is not available locally, it retrieves it from Docker Hub.

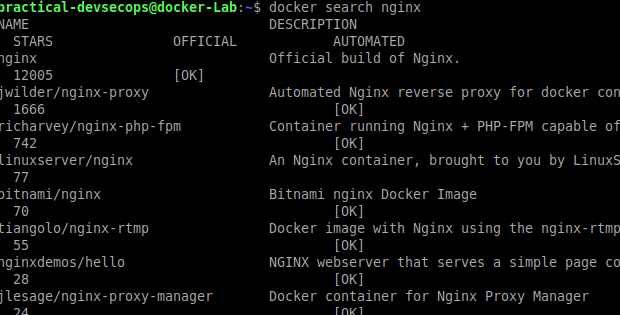

You can search for docker images in the hub using the docker search command

$ docker search <image-name>

For this example, we are looking for an Nginx image. We can use the following command.

$ docker search nginx

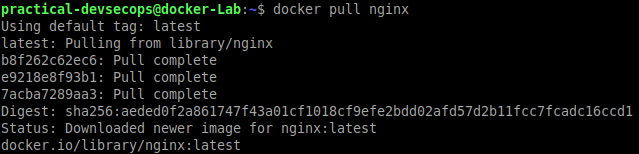

As you can see, there are multiple images available. Let’s download the first nginx image using the docker pull command.

$ docker pull <image-name>

By default, the image tagged latest will be pulled from Docker Hub. We can check for the downloaded Nginx image using the docker images command.

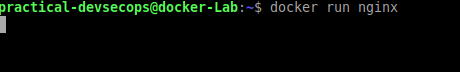

Let’s run the nginx image using the following command.

$ docker run <image-name>

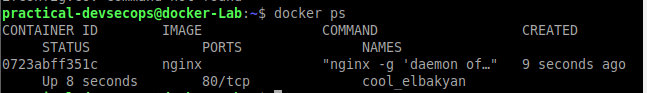

Open another terminal/tab and type docker ps to see the list of running containers on your machine.

Go back to the previous terminal/tab and stop the running nginx container by pressing Ctrl+C in the terminal. We can check the status of the running containers by re-running the docker ps command. You will see that the previous nginx container is no longer running.

Once we are done using the nginx image, we can remove it from the local machine using the docker rmi command.

$ docker rmi <image-name> --force

The --force flag is used to remove the image forcefully (for example, when a stopped container still references it).

Task

Remove the Nginx image and run the Nginx container again without pulling it from the repository.

Self-hosted Docker registry

As we discussed before, we can host our own local registry. We will use Docker to set up the Docker registry 🙂

Open up a terminal and run the following command.

$ docker run -d -p 5000:5000 --restart=always --name registry registry:2

We are using the registry image provided by Docker to host our local Docker registry. The new command options are explained briefly below; we will cover them in more detail in the next lesson.

--restart=always = unless stopped manually, restart the container if it dies for some reason.

-d = detached mode, run the container in the background.

-p = port mapping in the form host_port:container_port; here it publishes port 5000 of the registry on localhost:5000.

--name = a custom name for the container.

We created the mynmap container in the last exercises, let’s tag it as version 1.0 and upload it to the local registry.

$docker tag mynmap:latest localhost:5000/mynmap:1.0

Upload the container to our repository using the below push command

$ docker push localhost:5000/mynmap:1.0

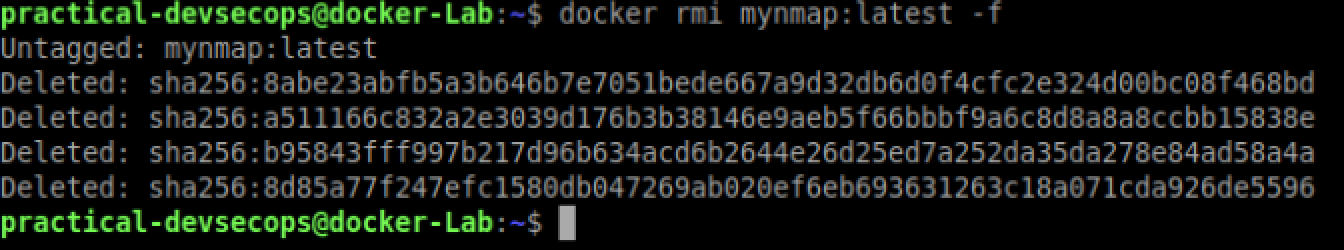

Now remove the locally available container by typing the below command in the terminal

$ docker rmi localhost:5000/mynmap:1.0

$ docker rmi mynmap:latest -f

Type the below command to pull the image from the local docker registry.

$ docker pull localhost:5000/mynmap:1.0

We can use this newly downloaded mynmap image as usual.

$ docker run localhost:5000/mynmap:1.0 localhost

Task

Create a new docker image using a Dockerfile with Nginx and run it on port 80. Once you have created the image, push it to the local registry.

Reference

Lesson 1, Understand Docker from a security perspective – https://www.practical-devsecops.com/lesson-1-understand-docker-from-a-security-perspective/

Conclusion

You deserve a pat on the back for reading this far. We have covered a lot of ground today, especially Docker images, Docker image layers, and Docker registries. With this foundation, you are better prepared to perform vulnerability assessments on Docker images.

In the next lesson, we will be learning about docker containers, docker networking, and docker volumes. You will also see how to manage multiple containers using docker-compose.

P.S. Remember to comment here if you have any questions or comments.

Appendix A

Another way to create a docker image.

The idea behind this technique is to create a new container with the desired base image and then run commands to set it up. Once you are satisfied with the container behavior, you export this container into an image.

For this example, we will be using an alpine image, install git in it and then create a new image out of it.

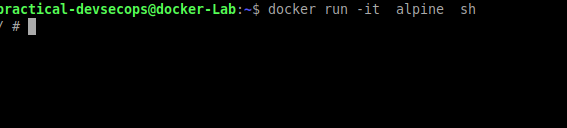

Step 1: Run an alpine container and access its shell.

$ docker run -it alpine sh

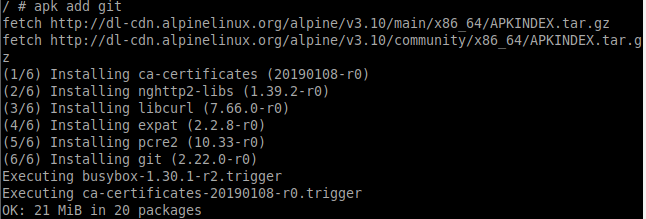

Step 2: Install git using the below command

$ apk add git

Exit out of the container by typing exit in the container shell.

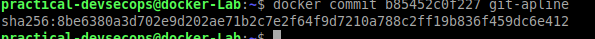

Step 3: Commit the changes made on the container layer (the write layer) to create a new image. You can find the container ID by running docker ps -a, which also lists stopped containers.

$ docker commit <container-id> <name>

You can verify the creation of a new image by using docker images command.

$ docker images

We have successfully created the docker image using ad-hoc commands. This definitely helps in creating a one-off image for debugging purposes but is not a recommended practice.

Very nice explanation, I wonder how do your other courses (CDP, CDA, CDE) look like.

Maybe a discount for me 🙂

Thank you Tomi for the kind words.

Sure, We would like to give discounts to our readers 🙂

I will reach out to you via email, thanks.

Awesome lesson, I learned some new cool stuff

New stuff as soon as lesson#2.

Nice.

Thanks !

Your concept explanation is super simple. This is one of the simplest and detailed docker learning tutorial I came across. Great job.

Hi Imran

Is there somewhere to check whether we have done the tasks correctly? I’m not sure if I have done the last one right or not.

Our slack channel 🙂 check lesson 3 for instructions

Hi Imran,

Thanks for these articles. They are very clear and to-the-point. I’ve already shared the links to my colleagues/friends as well.

Keep continuing the great work!

Am I the only one who is procrastinating 🙁

You can start today 🙂

Another great lesson – I didn’t know about whaler and it is very useful. Cheers.

Glad to know that mate. Talk to you soon

Hi Imran, it’s been very good going so far. One question: when I try to run the mynmap container I see the following error. Any help?

docker run mynmap localhost

localhost: line 1: [“nmap”]: not found

That’s because there are special characters in your Dockerfile. If you type the instructions manually, it should fix the issue.

Hi Imran,

Ran the container by removing Nginx and observed that container is locally searching for image , if not available it is pulling the image directly from hub and running it. Please correct me if I am wrong.

Thank You…!!!

You are right, thats the default behaviour of Docker 🙂

Hi Imran,

How can we list the images stored in self host registry?

Sharath, you can use the following command to fetch information about the available images in the registry

curl -X GET https://myregistry:5000/v2/_catalog

Hi Imran,

I am getting an error saying access denied for the below cmd:

docker tag nginx:latest nginx:1.0

docker push nginx:1.0

but the error got resolved if I do the same in the following way

docker tag nginx:latest localhost:5000/nginx:1.0

docker push localhost:5000/nginx:1.0

May I Know the reason behind this behavior?

Thank You..!!!

Hi Sharath,

Can you please check your email, I have added you to our course channel. We can help you resolve issues there.

Thanks. That solved.

Hi Imran,

Thanks for clearly explaining how things work and their importance from a security perspective. Does this course also have labs for a Windows 10 environment?

Can you please add me to the course channel.

Hi Imran,

In Appendix A

there is command docker commit after installing git

How I can get the .

thanks.

Hi Imran,

When i run the command docker run -d -p 5000:5000 -restart=always -name registry registry:2

it is showing the following error

unknown shorthand flag: 'r' in -restart=always

See 'docker run --help'.

This lesson was an eye opener for me, and I was amazed by the detailed explanation you shared.

Thanks for sharing such a great info with us.

Hi Imran,

Great content with simplified descriptions and exercises!

Thanks,

Indrajit.

Awesome work Imran & Team for the brilliant and concise content.